Disaster recovery (DR) is one of those old-school IT topics that seems to have fallen out of favor. The prevailing feeling is that we’re safe because the public clouds where we host our workloads can’t go down. This is an illusion.

DR is still critically important, even though we aren’t all running centralized mainframe computers anymore. If anything, it has become a new and special challenge. You now have to worry about what you’ll do if the Internet itself goes down.

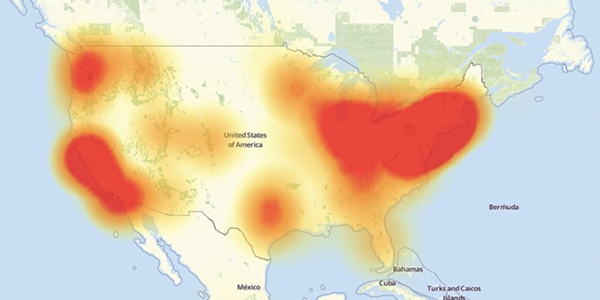

In the past year, we’ve seen several massive Internet failures. The DDoS attack against Dyn DNS services made thousands of organizations completely unreachable for many hours. The S3 failure at AWS earlier this year, and several smaller failures and DDoS attacks at cloud hosts around the world, prove it’s a serious concern.

And don’t forget your WAN. If it’s primarily VPN-based, you should think about what would happen if one or more of the key links failed or suffered a denial of service attack. (Note that I’m discussing a denial of service attack as if it were a natural disaster. That’s because it might as well be.)

The key to any good DR plan is to think about what can go wrong and come up with a contingency. DR for cloud services can get a bit more complicated than having a bunch of backup tapes at a cold standby site, and the DR testing scenarios are more involved than they used to be.

In this article, I’ll help you ask the right questions about creating a disaster recovery plan for your cloud and MSP services.

The disaster recovery questions to ask

At a high level, these are the questions I’d want to answer when building a DR plan for cloud-based services:

- How will you recover your compute resources?
  - Are there other key IT infrastructure elements, like voice or video?

- How will you recover your production data?
  - Do you share production data with third parties?

- How will you recover access to your compute resources and data?
  - For internal users? For customers?

- How will you recover critical communications resources?
  - Inbound as well as outbound voice, and don’t forget about call centers.

- How will you recover your internal communications?
  - What are the site-to-site voice, video, and data flows?

Don’t plan for smoking craters

Nearly every DR plan I’ve ever seen uses a disaster scenario that I call the “smoking crater.” The data center is gone, burned to the ground, hit by an asteroid, a tornado, an earthquake, a tsunami, or whatever your favorite disaster movie is. The smoking crater DR plan usually assumes nothing in the production data center is available. All applications, all databases, all servers are down.

There are several problems with a smoking crater DR plan.

First, this kind of disaster is extremely rare. I can’t remember the last time a data center actually burned down. If the facility is run in a remotely competent way, it will have many levels of power redundancy including UPS and generators. It will have sophisticated fire suppression mechanisms. Disasters that take out whole data centers are generally the result of poor planning.

Second, when there really is a smoking crater where your stuff used to be, people tend to be pretty forgiving. Think back to the days immediately after the 9/11 terror attacks. Nobody expected the affected companies to rebuild their IT infrastructure in minutes or hours. If there’s a real smoking crater, you don’t need a disaster recovery plan. You have more important things to worry about.

Third, customers care a lot more about the smaller disasters. Imagine a bank whose mainframe loses its marbles because of a bad software update, or whose central customer database gets corrupted. Imagine a medical insurance company that suddenly can’t process claims on one type of policy or an airline that can’t take bookings. In these types of scenarios, the company just looks bad and customers leave.

Instead of planning for a large hole in the ground, consider a more fine-grained DR plan in which you can separately recover individual applications or related groups of applications and services. It’s much harder to do, but you might find significant business benefits.

Recovering compute resources and data

The AWS S3 failure in February 2017 caused serious problems for a huge number of customers. Many of those customers weren’t even using AWS directly. They were using MSPs who were using AWS.

Cloud providers and hosting facilities can go down, even big international ones with good reputations. Assume that wherever you host your data and applications, it can be disrupted.

All of the big providers, like AWS, Azure, and OVH, have data centers in other locations that can serve as backups. Consider subscribing to the services that let you move your workloads to a different data center.

Protecting the compute resources does no good if you don’t also have current production data. So make sure your data is replicated between these facilities as close to real time as is practical.

In AWS EC2, you can build this fairly easily. Provision at least a minimal version of your environment in a different availability zone from the one where your primary workloads are running. (To survive a region-wide outage like the February 2017 S3 failure, use a different region instead.) Then replicate your production data to the DR location so it stays up to date.
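A standby copy is only useful if the replication feeding it is current. Here’s a minimal sketch of a readiness check you might run before relying on a standby. It verifies that the standby is in a different availability zone from production and that its newest replicated write falls within a lag you choose. The zone names, the `max_lag` threshold, and the assumption that your replication tooling reports a "last replicated at" timestamp are all illustrative. If you’re guarding against a region-wide outage, compare regions instead of zones.

```python
from datetime import datetime, timedelta, timezone


def dr_readiness_problems(
    primary_zone: str,
    standby_zone: str,
    last_replicated_at: datetime,
    max_lag: timedelta,
    now: datetime,
) -> list[str]:
    """Return a list of problems with the standby; an empty list means it looks usable.

    Assumption: your replication tooling can report the timestamp of the newest
    production write that has been applied at the standby site.
    """
    problems = []
    if standby_zone == primary_zone:
        problems.append("standby runs in the same availability zone as production")
    lag = now - last_replicated_at
    if lag > max_lag:
        problems.append(f"replication lag {lag} exceeds the allowed {max_lag}")
    return problems


# Example with made-up values: standby in a different zone, 30 seconds behind.
now = datetime(2017, 3, 1, 12, 0, tzinfo=timezone.utc)
print(dr_readiness_problems("us-east-1a", "us-east-1b",
                            now - timedelta(seconds=30), timedelta(minutes=5), now))  # []
```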

You also need a way to swing your production to the backup site. A good way to do this is by changing DNS records to point to the DR IP addresses.
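Repointing DNS doesn’t take effect instantly. A resolver that cached the old answer just before you made the change can keep handing it out until that record’s TTL expires. The sketch below keeps a hypothetical address plan and estimates how long the longest-caching clients may still see the old address. The hostnames and documentation-range IPs are placeholders, and the time your DNS provider takes to publish a change is an assumed input. The estimate also assumes resolvers honor the TTL.

```python
# Hypothetical DR address plan: hostname -> (production IP, DR IP).
# Names and addresses are placeholders from the documentation ranges.
DR_PLAN = {
    "www.example.com": ("192.0.2.10", "198.51.100.10"),
    "api.example.com": ("192.0.2.20", "198.51.100.20"),
}


def records_for(site: str) -> dict[str, str]:
    """Return the A records to publish when serving from 'production' or 'dr'."""
    index = {"production": 0, "dr": 1}[site]
    return {name: ips[index] for name, ips in DR_PLAN.items()}


def worst_case_stale_seconds(ttl_seconds: int, publish_seconds: int) -> int:
    """Upper bound on how long some clients may still resolve the old address.

    A resolver may cache the old answer just before the change is published
    and then keep it for a full TTL (assuming it honors the TTL).
    """
    return publish_seconds + ttl_seconds


print(records_for("dr"))
print(worst_case_stale_seconds(ttl_seconds=86400, publish_seconds=60))  # 86460
print(worst_case_stale_seconds(ttl_seconds=60, publish_seconds=60))     # 120
```

With a one-day TTL, some customers could keep hitting the dead production addresses for about a day. With a 60-second TTL, the worst case shrinks to a couple of minutes.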

There are similar features in Azure and other large cloud services.

Recovering access to the compute resources

How will your users access your compute resources during a disaster? When I say “users,” I mean both internal and external users: employees and customers.

If your customers need direct access to your systems, then make sure the DR systems look exactly the same as the production systems. The easiest way to do this is to repoint public DNS entries to the DR servers.

Suppose you have a website hosted at a cloud provider. When a disaster is declared, you want to move all the related systems to the cloud provider’s site halfway around the world.

And let’s suppose that you’ve managed to replicate all of your production data. There are three main ways to move your DNS entries to the new IP addresses at the DR site.

- If you have a DNS hosting provider, log into your account with the hosting provider and specify the new IP addresses. DNS responses include a TTL (Time to Live) value that specifies how long resolvers and clients may cache your DNS records. If you want to use this method, set a suitably short TTL well in advance, because a newly lowered TTL only matters once the old, longer one has expired from caches. Otherwise, your customers will keep using the old IP addresses and getting no response.

- Take advantage of a cloud WAF or DDoS protection service. Here your public DNS records point at the provider, so you’d log into your WAF or DDoS provider account and specify the new IP addresses of your servers. The change takes effect almost immediately, and you also get the obvious security benefits of the WAF or DDoS protection.

- Use a global load balancer. This is essentially a DNS host that polls your primary and backup servers and automatically flips to the backup if the primary is unreachable.
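To make the global load balancer option concrete, here’s a minimal sketch of the decision such a service makes. It assumes one health-probe result for the primary arrives at each polling interval, and that the balancer answers DNS queries with whichever address is currently active. The class name and threshold are hypothetical. Requiring several consecutive results before switching in either direction keeps a single lost probe from flipping traffic back and forth. For simplicity the sketch probes only the primary; a real service would also confirm the backup is healthy before sending traffic to it.

```python
class FailoverSelector:
    """Minimal sketch of the choice a DNS-based global load balancer makes.

    Assumptions: one health-probe result for the primary arrives per polling
    interval, and DNS queries are answered with the address in `active`.
    """

    def __init__(self, primary: str, backup: str, threshold: int = 3) -> None:
        self.primary = primary
        self.backup = backup
        self.threshold = threshold  # consecutive results needed before switching
        self.active = primary
        self._consecutive_failures = 0
        self._consecutive_successes = 0

    def observe(self, primary_healthy: bool) -> str:
        """Record one probe result for the primary and return the address to serve."""
        if primary_healthy:
            self._consecutive_successes += 1
            self._consecutive_failures = 0
            if self.active == self.backup and self._consecutive_successes >= self.threshold:
                self.active = self.primary
        else:
            self._consecutive_failures += 1
            self._consecutive_successes = 0
            if self.active == self.primary and self._consecutive_failures >= self.threshold:
                self.active = self.backup
        return self.active


selector = FailoverSelector("192.0.2.10", "198.51.100.10", threshold=3)
print([selector.observe(h) for h in [True, False, False, False, True]])
# ['192.0.2.10', '192.0.2.10', '192.0.2.10', '198.51.100.10', '198.51.100.10']
```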

As an aside, don’t forget the possibility that your compute resources are fine and the real problem is that your DNS provider is offline. Remember the 2016 DDoS attack against Dyn DNS. Most of the organizations that used Dyn to host their DNS records found themselves unreachable, even though there was nothing the matter with their own systems.

Consider having multiple DNS providers. If you do, remember that executing your disaster recovery plan while the primary systems are offline means changing the entries at every DNS provider. Also, ask your DNS providers where they’re hosted, to make sure no single failure can affect all of them at once.
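Once you’ve asked your providers where they’re hosted, the comparison itself is simple set arithmetic. This sketch takes the answers as hypothetical dependency labels (hosting cloud, transit carrier, and so on; the names below are made up). It reports every pair of providers that shares one, since any shared entry is a single failure that could take both down together.

```python
from itertools import combinations


def shared_dependencies(providers: dict[str, set[str]]) -> dict[tuple[str, str], set[str]]:
    """For every pair of DNS providers, return the upstream dependencies they share."""
    overlaps = {}
    for (name_a, deps_a), (name_b, deps_b) in combinations(sorted(providers.items()), 2):
        common = deps_a & deps_b
        if common:
            overlaps[(name_a, name_b)] = common
    return overlaps


# Hypothetical answers collected from each provider about where they are hosted.
providers = {
    "dns-provider-a": {"cloud-x us-east", "transit-carrier-1"},
    "dns-provider-b": {"own anycast network", "transit-carrier-1"},
    "dns-provider-c": {"cloud-y eu-west", "transit-carrier-2"},
}
print(shared_dependencies(providers))
# {('dns-provider-a', 'dns-provider-b'): {'transit-carrier-1'}}
```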

Recovering critical data flows

Many businesses have critical data that includes both internal and external sources or destinations. For example, you might need current stock prices. You might need to process insurance claims with third parties. You might need to process payments through banks or other financial clearing companies.

In all of these cases, you’ll want to ensure your backup servers have a way of accessing these external sources and destinations. Similarly, you should provision some sort of secondary path to the external parties from the primary servers. This way you don’t have to invoke the DR plan just because a circuit to a third-party server is down.

Note that in a pure active/standby configuration, you probably don’t need redundant circuits to your third parties from the standby site. Those backup circuits at the backup site would only be used in the event of multiple simultaneous failures, and we usually assume that probability is small enough to neglect. However, you should assess these risks for your specific requirements.
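The arithmetic behind neglecting simultaneous failures is a product of probabilities, but only if the failures are independent. Here’s a small sketch with made-up availability figures. Circuits that share a conduit, a carrier, or a power feed break the independence assumption, and the real risk will be higher. That’s the kind of thing the risk assessment should uncover.

```python
import math


def probability_all_down(unavailabilities: list[float]) -> float:
    """Probability that every listed path is down at the same moment.

    Assumes the failures are independent. Shared conduits, carriers, or power
    break that assumption and make the real figure higher.
    """
    return math.prod(unavailabilities)


SECONDS_PER_YEAR = 365 * 24 * 3600

# Hypothetical figures: each circuit is unavailable 0.1% of the time.
both_down = probability_all_down([0.001, 0.001])
print(both_down)                     # about one in a million
print(both_down * SECONDS_PER_YEAR)  # roughly 31.5 seconds per year
```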

Recovering internal communications

Finally, look over your entire internal communications product suite. By this I mean things like internal email or messaging services, telephony, and video conferencing. How do these services work? Do they rely on Internet-based service providers? Do they rely on VPN connections between sites?

If you’re using cloud-based services for internal communications, it’s probably for cost reasons, and it won’t be cost-effective to build an entire internal infrastructure just for backup purposes. However, you can still plan your way around this type of contingency.

For example, during a disaster you might decide to use cell phones for outbound phone calls. And you might decide video conferencing isn’t a critical service during a disaster.

Two important considerations for telephony in particular are inbound calling and inter-office communications. If you plan to use cell phones, for example, you’ll need a current list of cell phone numbers for all of your locations, and someone has to keep that list up to date. But you almost certainly don’t want to give out cell phone numbers to the general public, so this isn’t a great solution for inbound calling.

Another common solution to the inbound calling problem is to put a small number of traditional analog phone lines at each location that can receive calls from customers and the public. Then, talk to your telephony service provider and find out if they can redirect your public phone numbers to these analog lines in an emergency.

If you have a call center, you’ll probably need a more elaborate and robust disaster recovery plan. For example, your call center DR plan might involve redirecting inbound calls to an external call center service that might offer only limited functionality.

Testing and automation

Traditional IT disaster recovery plans typically involve annual testing exercises. These are inevitably gigantic pantomime shows created to please boards and auditors but generally so divorced from reality that they’re of little or no use in a real disaster. So when I talk about testing your DR, I’m not talking about such an exercise.

Instead, I advocate doing smaller targeted tests. For example, you might have a test to swing your production cloud services from one AWS availability zone to another and verify that the applications still work. Similarly, if you have a backup for your WAN, either using VPNs or migrating from VPN to another technology, you can do an isolated test of that.

Ideally, every system for which there’s a backup should have a test to ensure the backup can take over when it’s required.
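If your systems inventory records which systems have a backup and when each backup last passed a failover test, you can check test coverage mechanically. The inventory fields and the 90-day window below are hypothetical; substitute whatever your own inventory tracks and however often you decide each test should run.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class InventoryEntry:
    """One row of a hypothetical systems inventory."""
    name: str
    has_backup: bool
    last_successful_failover_test: Optional[date]  # None means never tested


def backups_needing_tests(entries: list[InventoryEntry], today: date, max_age: timedelta) -> list[str]:
    """Names of systems whose backup has never been tested, or not tested recently."""
    overdue = []
    for entry in entries:
        if not entry.has_backup:
            continue  # nothing to test yet; that gap belongs in a separate review
        tested = entry.last_successful_failover_test
        if tested is None or today - tested > max_age:
            overdue.append(entry.name)
    return overdue


inventory = [
    InventoryEntry("customer-db", True, date(2017, 8, 1)),
    InventoryEntry("wan-vpn-backup", True, None),
    InventoryEntry("intranet-wiki", False, None),
]
print(backups_needing_tests(inventory, today=date(2017, 9, 1), max_age=timedelta(days=90)))
# ['wan-vpn-backup']
```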

Disaster recovery purists will cry foul at this point. They’ll say you need integrated testing to simulate a real failure. There’s some truth to this. If a real failure scenario involves the complete loss of a data center (or a cloud availability zone), then an isolated test might inadvertently make use of functions that wouldn’t be available in that real failure.

So, once you’ve completed isolated testing of all the individual component systems, you should also do some large-scale disaster scenarios. However, these scenarios and the recovery methods need to be realistic. You can’t just lump together a whole bunch of unrelated systems and pretend some fictional disaster has taken these and only these systems down.

To help make the scenarios realistic, look at recent incidents such as:

- DDoS attacks against a key service provider

- DNS servers inaccessible

- Cloud storage inaccessible

- Cloud availability zone or data center unreachable

- Targeted attack against your organization’s Internet presence

There are of course many other potential incidents, but try to keep the scenarios realistic. Sure, there’s always the possibility that a zero-day vulnerability in the firewall system you use will suddenly be exploited worldwide, but I can’t remember anything like that ever happening in the past, so it’s probably pretty unlikely.

And if it did happen, you’d be in excellent company. It’s likely there would be a lot of help available.

As you do these tests, it will become immediately obvious what needs to be automated. If you need to move hundreds of VM servers between data centers, or bring up a bunch of VPNs all at once, you need automation. These things can’t be done by hand in a reasonable time.

Personally, I like everything to be automated. However, this doesn’t mean I want the automation to proceed without supervision.

Most traditional DR plans require a conscious management decision to trigger the recovery efforts. You don’t want a short-term technical blip or instability to cause your production operations to flip back and forth between production and DR facilities. That could be more disruptive than just suspending operations for a few minutes until everything has stabilized. It’s a business decision.
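One way to keep automation under supervision is to let it do every recovery step, but refuse to start until a named person has made the business decision. The class, the function, and the step names below are hypothetical stand-ins for whatever your own runbook automates. The design choice is that a missing approval is a normal outcome that gets logged, not an error.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class FailoverDecision:
    """Hypothetical record of the business decision to invoke DR."""
    reason: str
    approved_by: Optional[str] = None
    log: list[str] = field(default_factory=list)


def run_failover(decision: FailoverDecision, steps: list[tuple[str, Callable[[], None]]]) -> bool:
    """Run automated recovery steps only after someone has approved the failover.

    Returns False without touching anything if approval is missing. Steps run in
    order; if one raises, the exception propagates so a human can take over.
    """
    if decision.approved_by is None:
        decision.log.append(f"waiting for approval: {decision.reason}")
        return False
    decision.log.append(f"approved by {decision.approved_by}")
    for name, step in steps:
        step()
        decision.log.append(f"done: {name}")
    return True


decision = FailoverDecision(reason="primary zone unreachable for 10 minutes")
steps = [("repoint DNS", lambda: None), ("bring up backup VPNs", lambda: None)]
print(run_failover(decision, steps))  # False: nobody has approved yet
decision.approved_by = "duty manager"
print(run_failover(decision, steps))  # True
```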

In networking, we’ve been incorporating backup links and redundant paths for decades. So there are highly robust routing protocols and testing methods for detecting failures and redirecting traffic through backup paths. Use them.

If you need to implement some global NAT rule to allow a DR server to look like the production server or to bring up a bunch of backup VPNs, then have a way of globally triggering these changes. You don’t want to have to log into a hundred firewalls during a disaster. It has to be fast and simple.
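A global trigger can be as simple as one function that pushes the same change to every device in parallel and reports which ones didn’t take it. In this sketch, `apply_change` is a hypothetical hook. In practice it would wrap your firewall vendor’s management API or configuration tool for a single device. The failing device in the usage example is simulated.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def apply_everywhere(devices: list[str], apply_change: Callable[[str], None], max_workers: int = 20) -> dict[str, str]:
    """Push one change to every device in parallel and report the result per device."""

    def run(device: str) -> tuple[str, str]:
        try:
            apply_change(device)
            return device, "ok"
        except Exception as exc:  # keep going; report the failure for follow-up
            return device, f"failed: {exc}"

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(run, devices))


def fake_apply(device: str) -> None:
    """Simulated change: one hypothetical firewall does not respond."""
    if device == "fw-017":
        raise TimeoutError("no response")


firewalls = [f"fw-{n:03d}" for n in range(1, 101)]
results = apply_everywhere(firewalls, fake_apply)
print({d: r for d, r in results.items() if r != "ok"})  # {'fw-017': 'failed: no response'}
```

Collecting failures instead of stopping at the first one matters here. During a disaster, some devices may be unreachable for the same reason you’re failing over.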

The same goes for moving servers. Your DR plan can’t rely on building servers from scratch. You also can’t be moving terabytes of data across WAN links. The data should already be there. Use whatever technology is appropriate to keep it synchronized.
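A quick calculation shows why bulk copies during a disaster don’t work. This sketch uses decimal units, and the `efficiency` factor is an assumed fraction of the link you actually get after protocol overhead and competing traffic.

```python
def transfer_hours(data_terabytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours needed to copy data over a WAN link.

    Uses decimal units (1 TB = 10**12 bytes, 1 Gbit/s = 10**9 bits/s).
    `efficiency` is the assumed fraction of the link's capacity you actually get.
    """
    bits = data_terabytes * 10**12 * 8
    seconds = bits / (link_gbps * 10**9 * efficiency)
    return seconds / 3600


print(transfer_hours(10, 1))                  # about 22.2 hours at full line rate
print(transfer_hours(10, 1, efficiency=0.7))  # about 31.7 hours
```

Even on a dedicated 1 Gbit/s link, 10 TB takes most of a day to move, which is longer than most businesses will accept as an outage.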

Recovery time and recovery point

The previous section on testing and automation brings us around to two critical business questions in creating your DR plans: the recovery time objective (RTO) and the recovery point objective (RPO).

RTO is the amount of time it will take to get your production systems back online in whatever recovery mode is appropriate. It’s how long you’ll be down, as seen by the system’s end users.

RPO is a measure of how recent the data should be when you get back online. If your DR plan involves recovering from last night’s automated backup, then your RPO is 24 hours. If that’s not good enough, you need some way of copying your current live data to the DR systems more frequently.
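The same reasoning works for any backup schedule. If the primary fails just before a backup completes, you lose everything since the previous one, so the worst-case RPO is the longest gap between recovery points. Here’s a small sketch for a schedule that repeats every 24 hours, with hypothetical backup times.

```python
def worst_case_rpo_hours(backup_hours: list[float]) -> float:
    """Longest gap between recovery points in a schedule that repeats every 24 hours.

    `backup_hours` holds the times of day (0-24) at which a backup completes.
    """
    if not backup_hours:
        raise ValueError("need at least one backup time")
    times = sorted(h % 24 for h in backup_hours)
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    gaps.append(times[0] + 24 - times[-1])  # from the last backup of the day to the first of the next
    return max(gaps)


print(worst_case_rpo_hours([2]))      # nightly backup at 02:00 -> 24
print(worst_case_rpo_hours([2, 14]))  # twice a day, evenly spaced -> 12
print(worst_case_rpo_hours([1, 5]))   # twice a day, unevenly spaced -> 20
```

The uneven example shows why adding backups only helps if they’re spaced out. Two backups four hours apart still leave a 20-hour window.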

Highly mission-critical systems do things like replicating every transaction in real time, but the mechanisms depend on what the data is and how the application processes it.

RTO and RPO values are ultimately business decisions. But the better the recovery, the higher the cost, and cost is a critical part of this decision. Your job is to come up with two or three detailed plans with accompanying prices so negotiations can be based on reality.

Final thoughts

Disaster recovery planning is always a point-in-time exercise. That means it has to be kept up to date.

Incremental changes like a new remote office or a new virtual server should be accommodated automatically. But you should be regularly reviewing the operational infrastructure of your organization to ensure the DR plan will recover your most critical business functions. This will also require a good systems inventory, which is something you should have for operational reasons anyway.

It’s usually not necessary to recover everything. There are always relatively unimportant functions that can go away without significantly affecting the business. Assume that if the disaster goes on for more than a day or so, you’ll have the opportunity to start building those less important functions from scratch again.

I strongly advocate a fine-grained disaster recovery approach in which related groups of systems have a common recovery mechanism. I also recommend testing these recovery mechanisms regularly.